This blog has moved! Please update your links

My new website is jshopf.com and the levelofdetail blog is now located at jshopf.com/blog.

There’s a new post there about the first day of the I3D 2009 conference, so go there and read it. And update your RSS feeds!

Imperfect Shadow Maps

“Imperfect Shadow Maps for Efficient Computation of Indirect Illumination” by Ritschel et al., a real-time indirect lighting method, can be summarized as follows: it solves the visibility problem present in the paper “Splatting Indirect Illumination” by Dachsbacher and Stamminger.

The splatting indirect illumination method works by rendering what the authors call a reflective shadow map (RSM). An RSM is a collection of images that capture information about the surfaces visible from a light source. The RSM is then sampled to choose surfaces that will be used as Virtual Point Lights (VPLs). Indirect lighting is then calculated as the sum of the direct lighting contributions of these VPLs. The idea of approximating radiosity with point lights was first described in the paper Instant Radiosity. To light the scene with each VPL, the method performs deferred shading by rendering some proxy geometry that bounds the influence of the light, effectively splatting the illumination from that (indirect) light onto the scene.
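To make that sum concrete, here is a minimal Python sketch of gathering indirect light at one receiver from a list of VPLs. It assumes diffuse VPLs that emit with a cosine falloff (intensity Φ·cos θ / π), a scalar flux, and an arbitrary distance clamp `min_dist2`; the names `VPL` and `indirect_irradiance` are hypothetical. The real method does this on the GPU by splatting proxy geometry, not with a CPU loop.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

@dataclass
class VPL:
    position: Vec3  # surface point sampled from the RSM
    normal: Vec3    # unit surface normal at that point
    flux: float     # reflected flux (a scalar here; RGB in practice)

def indirect_irradiance(x: Vec3, n: Vec3, vpls: List[VPL], min_dist2: float = 1e-2) -> float:
    """Sum the direct contribution of every VPL at receiver x with unit normal n.
    Note: there is NO visibility term; that missing term is what ISM provides."""
    total = 0.0
    for v in vpls:
        d = sub(x, v.position)
        dist2 = dot(d, d)
        if dist2 == 0.0:
            continue
        inv_len = 1.0 / math.sqrt(dist2)
        w = (d[0] * inv_len, d[1] * inv_len, d[2] * inv_len)  # direction VPL -> receiver
        cos_vpl = max(0.0, dot(v.normal, w))
        cos_recv = max(0.0, -dot(n, w))
        # Clamp the distance to tame the 1/r^2 singularity close to the VPL.
        total += v.flux * cos_vpl * cos_recv / (math.pi * max(dist2, min_dist2))
    return total
```

An occluder sitting between a VPL and the receiver changes nothing in this loop, which is exactly the artifact described next.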

The problem with this method is that the illumination is splatted onto the scene without any information about the visibility of that VPL. The surface being splatted upon could be completely hidden from the VPL by an occluder but would still receive the full amount of bounced lighting. What you would really need here is a shadow map rendered for each VPL. But to get good indirect illumination you need hundreds or thousands of VPLs, which means hundreds or thousands of shadow maps. Let’s face it, that ain’t happenin’ in real-time. First of all, you’d have to render your scene X number of times, which means you’d have to limit the complexity of your scene or use some kind of adaptive technique like progressive meshes. On top of that you’d have X draw calls, each with its own overhead.

So what Imperfect Shadow Maps does is figure out a way to render hundreds or thousands of shadow maps in one draw call and with dramatically reduced amounts of geometry.

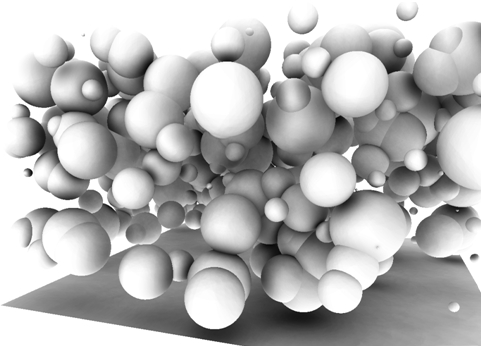

The paper achieves this by rendering 1024 paraboloid shadow maps of a sparse point representation of the scene. During preprocessing, many points are distributed uniformly across the scene. Then n sets of ~8k points are constructed, where n is the number of VPLs the algorithm will use at run-time. The number 8k is not mentioned in the paper, but the author stated it in his SIGGRAPH Asia presentation. The points in each set are chosen randomly. At run-time, each of the n point sets is rendered to its respective paraboloid depth map.
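A rough CPU sketch of the preprocessing and of rendering one point set into one VPL's depth map. It assumes each VPL gets a single hemisphere (paraboloid) map oriented along its surface normal and stores Euclidean distance as depth; the function names, the 128 default resolution and the seed handling are illustrative, not the paper's code, which renders all sets in one GPU draw call.

```python
import math
import random
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)

def make_point_sets(scene_points: Sequence[Vec3], n_vpls: int, points_per_set: int,
                    seed: int = 0) -> List[List[Vec3]]:
    """Preprocess: one random subset of the scene's points per VPL."""
    rng = random.Random(seed)
    return [rng.sample(list(scene_points), points_per_set) for _ in range(n_vpls)]

def tangent_frame(n: Vec3) -> Tuple[Vec3, Vec3]:
    """Two unit vectors orthogonal to the unit normal n (and to each other)."""
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = normalize(cross(helper, n))
    return t, cross(n, t)

def render_paraboloid_depth(points: Sequence[Vec3], vpl_pos: Vec3, vpl_normal: Vec3,
                            res: int = 128) -> List[List[float]]:
    """Splat each point into the VPL's hemisphere depth map. Texels no point
    lands on stay at +inf; these are the holes that get filled later."""
    depth = [[math.inf] * res for _ in range(res)]
    t, b = tangent_frame(vpl_normal)
    for p in points:
        d = sub(p, vpl_pos)
        dist = math.sqrt(dot(d, d))
        if dist == 0.0:
            continue
        d = (d[0] / dist, d[1] / dist, d[2] / dist)
        z = dot(d, vpl_normal)
        if z <= 0.0:  # behind the VPL's hemisphere
            continue
        u = dot(d, t) / (1.0 + z)  # paraboloid projection, u and v in [-1, 1]
        v = dot(d, b) / (1.0 + z)
        x = min(int((u * 0.5 + 0.5) * res), res - 1)
        y = min(int((v * 0.5 + 0.5) * res), res - 1)
        depth[y][x] = min(depth[y][x], dist)  # depth test: keep the nearest point
    return depth
```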

Ok, you’re rendering a bunch of sparse points to a low-res (128×128 or less) shadow map. As you may suspect, it’s going to look like garbage:

It’s a Cornell box, can’t you tell?

The authors get clever here and use pull-push upsampling to fill the holes between the points, with some thresholding to make sure they don’t fill holes across depth discontinuities. Even after the holes are filled, the shadow maps still kind of look bad:
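A minimal pull-push sketch on a square, power-of-two depth map whose holes are `math.inf`. The discontinuity handling is a simple stand-in of our own: during the pull, only children within `threshold` of the nearest valid child are averaged. The paper's exact weighting may differ.

```python
import math
from typing import List

def pull_push(depth: List[List[float]], threshold: float = math.inf) -> List[List[float]]:
    """Fill +inf holes in a square, power-of-two depth map (returns a new map)."""
    levels = [[row[:] for row in depth]]
    # Pull: build coarser levels from the valid (finite) texels only.
    while len(levels[-1]) > 1:
        fine = levels[-1]
        n = len(fine) // 2
        coarse = [[math.inf] * n for _ in range(n)]
        for y in range(n):
            for x in range(n):
                kids = [fine[2 * y + j][2 * x + i] for j in (0, 1) for i in (0, 1)]
                valid = [k for k in kids if not math.isinf(k)]
                if valid:
                    nearest = min(valid)
                    # Skip children far behind the nearest one (crude discontinuity guard).
                    close = [k for k in valid if k - nearest <= threshold]
                    coarse[y][x] = sum(close) / len(close)
        levels.append(coarse)
    # Push: walk back down, filling each remaining hole from its parent texel.
    for lvl in range(len(levels) - 2, -1, -1):
        fine, coarse = levels[lvl], levels[lvl + 1]
        for y in range(len(fine)):
            for x in range(len(fine)):
                if math.isinf(fine[y][x]):
                    fine[y][x] = coarse[y // 2][x // 2]
    return levels[0]
```

Texels that already hold a point are never overwritten; only the gaps inherit values from coarser levels.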

But it doesn’t matter so much, because indirect illumination is smooth and you’re going to be adding the contributions of hundreds of these things at each pixel, so the incorrect visibility of each individual VPL gets smoothed out in the end.

That’s the basic idea.

The authors present some other cool things in the paper, like how to adaptively choose VPLs from the RSMs, and they also use the trick from “Non-interleaved Deferred Shading of Interleaved Sample Patterns” (talked about here) and only process a subset of the VPLs at each pixel.
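The interleaved-sampling trick can be sketched like this: the VPLs are split into k×k groups, each pixel in a k×k tile evaluates only one group, and a filter over the tile recombines them. This sketch assumes a plain box filter (the actual methods use geometry-aware filtering to avoid blurring across edges), and `contribution(x, y, i)` is a hypothetical callback returning VPL i's light at pixel (x, y).

```python
from typing import Callable, List

def vpl_subset_for_pixel(x: int, y: int, n_vpls: int, k: int) -> List[int]:
    """Pixel (x, y) evaluates only every (k*k)-th VPL, offset by its slot in the k x k tile."""
    group = (y % k) * k + (x % k)
    return list(range(group, n_vpls, k * k))

def box_filter(img: List[List[float]], k: int) -> List[List[float]]:
    """Average a k x k window around each pixel (clamped at the image border)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - k // 2), min(h, y - k // 2 + k))
            xs = range(max(0, x - k // 2), min(w, x - k // 2 + k))
            vals = [img[j][i] for j in ys for i in xs]
            out[y][x] = sum(vals) / len(vals)
    return out

def interleaved_indirect(width: int, height: int, n_vpls: int, k: int,
                         contribution: Callable[[int, int, int], float]) -> List[List[float]]:
    img = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            subset = vpl_subset_for_pixel(x, y, n_vpls, k)
            # Scale by k*k so the partial sum estimates the sum over all VPLs.
            img[y][x] = sum(contribution(x, y, i) for i in subset) * (k * k)
    return box_filter(img, k)
```

Each pixel does roughly 1/k² of the shading work; the filter trades that savings for some blur in the (already smooth) indirect term.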

Also, there is a paper that just got accepted to I3D called “Multiresolution Splatting for Indirect Illumination” by Nichols and Wyman that is a perfect fit for this paper. I’ll probably post a bit about that tomorrow.

Imperfect Shadow Maps for Efficient Computation of Indirect Illumination

Tobias Ritschel, Thorsten Grosch, Min H. Kim, Hans-Peter Seidel, Carsten Dachsbacher, Jan Kautz ACM Trans. on Graphics (Proceedings SIGGRAPH Asia 2008), 27(5), 2008.

Splatting indirect illumination

Dachsbacher, C. and Stamminger, M. 2006. In Proceedings of the 2006 Symposium on interactive 3D Graphics and Games (Redwood City, California, March 14 – 17, 2006). I3D ’06. ACM, New York, NY, 93-100.

Let’s Have a Min/Max Party

Today I was waiting for a session to begin at SIGGRAPH ASIA and began to think about how several cool papers exploit min/max images. A min/max image is an image pyramid, similar to a quadtree. The bottom level of the hierarchy is the original image, while each element in a subsequent level stores the minimum and maximum of the corresponding four elements in the previous level. So it’s sort of like a mip map, but instead of averaging values, you store the min and max of the previous level. This min/max hierarchy can be generated quickly in log n passes and can be used to make conservative estimates over large regions of your image. Refer to the following papers:
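A minimal Python sketch of building such a pyramid, assuming a square, power-of-two image stored as nested lists. Each level halves the resolution, so an n×n image needs log2(n) reduction passes; the function name is our own.

```python
from typing import List, Tuple

MinMax = Tuple[float, float]

def build_min_max_pyramid(image: List[List[float]]) -> List[List[List[MinMax]]]:
    """Level 0 holds (v, v) per pixel; each texel of level i+1 stores the
    (min, max) of the corresponding 2x2 block of level i."""
    level = [[(v, v) for v in row] for row in image]
    pyramid = [level]
    while len(level) > 1:
        prev = level
        n = len(prev) // 2
        level = [[(min(prev[2 * y + j][2 * x + i][0] for j in (0, 1) for i in (0, 1)),
                   max(prev[2 * y + j][2 * x + i][1] for j in (0, 1) for i in (0, 1)))
                  for x in range(n)]
                 for y in range(n)]
        pyramid.append(level)
    return pyramid
```

A single texel at a coarse level then bounds every value in the block it covers, which is what makes the conservative queries cheap.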

Maximum Mipmaps for Fast, Accurate, and Scalable Dynamic Height Field Rendering by A. Tevs, I. Ihrke, H.-P. Seidel

– Uses min/max maps to ray trace height fields. I feel like this idea has been around for ages but here it is all packaged up with a neat little bow.

Fast GPU Ray Tracing of Dynamic Meshes using Geometry Images by Nathan Carr, Jared Hoberock, Keenan Crane, John C. Hart

– Uses min/max hierarchies of Geometry Images to accelerate the ray tracing of meshes.

Real-time Soft Shadow Mapping by Backprojection by Gaël Guennebaud, Loïc Barthe, Mathias Paulin

High-Quality Adaptive Soft Shadow Mapping by Gaël Guennebaud, Loïc Barthe, Mathias Paulin

– I’ve ranted about these papers before. These works generate min/max hierarchies of shadow camera depth images to perform efficient blocker searches for soft shadow rendering, and also to determine penumbra regions for further optimization.

March of the Froblins SIGGRAPH course notes by Jeremy Shopf, Joshua Barczak, Christopher Oat, Natalya Tatarchuk

– Used a min/max hierarchy of the depth buffer to occlusion cull agents in our crowd simulation. Technically this only used the max portion of the hierarchy, but I didn’t want to title this Let’s have a Min/Max Party (Min is Optional).
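A sketch of the kind of conservative test the max portion enables. It assumes the depth buffer stores larger values for farther surfaces and that each agent has already been projected to an inclusive screen-space rectangle plus the depth of its nearest point; the level-selection rule and the names are illustrative, not the actual Froblins code.

```python
from typing import List

def build_max_pyramid(depth: List[List[float]]) -> List[List[List[float]]]:
    """Max-only depth hierarchy for a square, power-of-two depth buffer."""
    pyramid = [depth]
    while len(pyramid[-1]) > 1:
        fine = pyramid[-1]
        n = len(fine) // 2
        pyramid.append([[max(fine[2 * y][2 * x], fine[2 * y][2 * x + 1],
                             fine[2 * y + 1][2 * x], fine[2 * y + 1][2 * x + 1])
                         for x in range(n)] for y in range(n)])
    return pyramid

def is_occluded(pyramid: List[List[List[float]]], x0: int, y0: int, x1: int, y1: int,
                nearest_depth: float) -> bool:
    """Conservative test: True only if the object's nearest point lies behind
    the farthest depth stored anywhere under its screen rectangle."""
    lvl = 0
    # Climb until the rectangle spans at most 2 x 2 texels at this level.
    while lvl < len(pyramid) - 1 and ((x1 >> lvl) - (x0 >> lvl) > 1 or (y1 >> lvl) - (y0 >> lvl) > 1):
        lvl += 1
    level = pyramid[lvl]
    farthest = max(level[y][x]
                   for y in range(y0 >> lvl, (y1 >> lvl) + 1)
                   for x in range(x0 >> lvl, (x1 >> lvl) + 1))
    return nearest_depth > farthest
```

Because the covering texels can include pixels outside the rectangle, the test may keep a hidden agent, but it never culls a visible one.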

Anyway, I think it’s kind of neat. I’m going to make another post tomorrow night about an awesome paper that’s here at the conference but I don’t want to write about it until I have a chance to clear up some nebulous parts of the paper with the author.

In other news, I received official word that the GDC lecture I proposed was accepted so I guess I will be seeing some of you in San Francisco next year in March. I’m excited about this talk because it came directly out of a post on this blog. Turns out this isn’t a waste of time after all!

Pixel-Correct Shadow Maps with Temporal Reprojection …

.. and Shadow Test Confidence!

This paper is in the running for both the longest graphics paper title and the neatest lil’ shadow rendering method in recent memory. It’s pretty cool in its own right, but as of late I’ve had a special affection for papers that exploit temporal coherence. I’d like to implement this in the near future (I wouldn’t think it would take much longer than writing two blog posts.. why do I do this again?!) to see how usable it is in practice.

So.. the basic idea here is that each rasterization of the scene used for a shadow map provides a limited amount of information about occluders from the viewpoint of the light (due to its discrete nature). This is the source of spatial aliasing (blockiness). However, rasterizations of the scene over several frames provide much more information. This paper exploits this fact to generate pixel-correct shadows by “honing in” on the correct answer over many frames, relying on the human eye being too slow to notice this gradual convergence. The method is lightweight and simple, and it should fit right into an existing rendering pipeline.

Here are the salient points of the paper:

– The method uses a screen-space history buffer that maintains information about per-pixel visibility over the past few frames. This is similar to the reprojection cache (another awesome paper that exploits temporal coherence, links below).

– The algorithm consists of four steps:

1) Calculate current frame’s per-pixel visibility using traditional shadow mapping.

2) Reproject each pixel into the history buffer by transforming its position with the camera transformation matrices of the current and previous frames.

3) Update the history buffer using this frame’s visibility test results.

4) Shadow scene with updated history buffer.

– The history buffer is updated with exponential smoothing according to some confidence value that describes how close the sample is to a correct visibility result.

– The confidence value is calculated from the distance between a pixel’s position in shadow map space and the closest shadow map texel center. This makes sense: a scene position that maps exactly onto a shadow map texel center has a correct visibility test result.

– The shadow map must contain different rasterizations of the scene over time or no new information is added to the system. This is achieved by sub-pixel jittering in both translation and rotation of the shadow camera.
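A per-pixel sketch of steps 2 and 3 in Python. It assumes a row-major 4×4 view-projection matrix for the previous frame, nearest-neighbour history lookups, no y-flip between clip space and texture space, and a confidence of the form (1 − 2·max(|dx|, |dy|))^m. The exponent `m` and all function names are our own placeholders, not values or code from the paper.

```python
import math
from typing import List, Optional, Sequence, Tuple

def shadow_test_confidence(sx: float, sy: float, m: float = 4.0) -> float:
    """sx, sy: the pixel's position in shadow-map texel units (texel centers at k + 0.5).
    Returns 1 at a texel center and 0 on a texel border. The linear falloff and
    the sharpening exponent m are assumptions for this sketch."""
    dx = abs(sx - (math.floor(sx) + 0.5))
    dy = abs(sy - (math.floor(sy) + 0.5))
    return (1.0 - 2.0 * max(dx, dy)) ** m

def reproject(world_pos: Sequence[float],
              prev_view_proj: Sequence[Sequence[float]]) -> Optional[Tuple[float, float]]:
    """Step 2: project with last frame's view-projection matrix. Returns uv in
    [0, 1]^2, or None if the point was off screen or behind the camera."""
    v = (world_pos[0], world_pos[1], world_pos[2], 1.0)
    x, y, _, w = (sum(row[c] * v[c] for c in range(4)) for row in prev_view_proj)
    if w <= 0.0:
        return None
    u, t = 0.5 * x / w + 0.5, 0.5 * y / w + 0.5
    if not (0.0 <= u <= 1.0 and 0.0 <= t <= 1.0):
        return None
    return u, t

def update_history(history: float, visibility: float, confidence: float) -> float:
    """Step 3: exponential smoothing; confident shadow tests pull harder toward the new result."""
    return history + confidence * (visibility - history)

def temporal_visibility(world_pos, shadow_xy, visibility: float, prev_view_proj,
                        history_buffer: List[List[float]]) -> float:
    uv = reproject(world_pos, prev_view_proj)
    if uv is None:
        return visibility  # no usable history: fall back to this frame's shadow test
    h, w = len(history_buffer), len(history_buffer[0])
    px, py = min(int(uv[0] * w), w - 1), min(int(uv[1] * h), h - 1)
    conf = shadow_test_confidence(*shadow_xy)
    return update_history(history_buffer[py][px], visibility, conf)
```

A result that lands on a texel center overwrites the history outright, while one on a texel border leaves it untouched; the jittered shadow camera is what keeps feeding new, differently aligned samples.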

READ!

Daniel Scherzer, Stefan Jeschke, Michael Wimmer. Pixel-Correct Shadow Maps with Temporal Reprojection and Shadow Test Confidence. In Rendering Techniques 2007 (Proceedings Eurographics Symposium on Rendering).

P. Sitthi-amorn, J. Lawrence, L. Yang, P. V. Sander, D. Nehab. An Improved Shading Cache for Modern GPUs. ACM SIGGRAPH Symposium on Graphics Hardware 2008.

D. Nehab, P. V. Sander, J. Lawrence, N. Tatarchuk, J. Isidoro. Accelerating Real-Time Shading with Reverse Reprojection Caching. ACM SIGGRAPH Symposium on Graphics Hardware 2007.

What’s going on here?!

I haven’t been posting much lately. What I have been posting has been other people’s stuff rather than any original thoughts. I’ve been pursuing several different topics but I can’t post about them because they may end up being used at work. Anyway, if you’re looking for lots of interesting graphics links, you should start/keep reading the Real-time Rendering blog. You should read the Real-time Rendering book too (though I haven’t got a copy myself, yet). From here on out I think I’m just going to stick to posting things that I am doing myself and content related to it.

Screenshot of a real-time technique described in an article I wrote to be published in ShaderX7

Edit: I should add that the title “What’s going on here?!” is referring to what’s been going on with the blog. It’s not a request for people to guess what is going on in the picture (but you can do that too).

Iñigo Quílez explains Slisesix demo

Iñigo Quílez recently posted a presentation on the techniques used in the “Slisesix” rgba demo. Because the scene is procedurally represented as a distance field, a fast, space-skipping raycast can be performed. The distance field representation also enables other tricks, such as fast AO and soft shadows, because information such as the distance and direction of the closest occluders is implicit in the representation. Alex Evans talked about using this type of technique (see Just Blur and Add Noise), but in his proposed implementation the distance field came from rasterizing geometry into slices and blurring outward.
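A minimal sphere-tracing sketch in Python for a hypothetical scene (a unit sphere resting on a ground plane). The soft-shadow function uses the widely used k·h/t penumbra approximation for distance fields; it illustrates why the representation makes soft shadows cheap and is not necessarily the exact formulation in the presentation. `k` is an arbitrary sharpness parameter.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

def scene_distance(p: Vec3) -> float:
    """Hypothetical scene: a unit sphere centered at (0, 1, 0) resting on the plane y = 0."""
    x, y, z = p
    sphere = math.sqrt(x * x + (y - 1.0) ** 2 + z * z) - 1.0
    return min(sphere, y)

def along(o: Vec3, d: Vec3, t: float) -> Vec3:
    return (o[0] + t * d[0], o[1] + t * d[1], o[2] + t * d[2])

def sphere_trace(origin: Vec3, direction: Vec3, max_t: float = 100.0,
                 eps: float = 1e-4, max_steps: int = 256) -> Optional[float]:
    """March a unit-length ray. The distance value is always a safe step size,
    so large empty regions are skipped in a few iterations."""
    t = 0.0
    for _ in range(max_steps):
        dist = scene_distance(along(origin, direction, t))
        if dist < eps:
            return t
        t += dist
        if t > max_t:
            break
    return None

def soft_shadow(origin: Vec3, light_dir: Vec3, k: float = 8.0, t_min: float = 1e-2,
                t_max: float = 20.0, max_steps: int = 256) -> float:
    """0 = fully shadowed, 1 = fully lit. A near miss (small distance h at ray
    distance t) darkens the result, giving a penumbra without extra rays."""
    res, t = 1.0, t_min
    for _ in range(max_steps):
        if t >= t_max:
            break
        h = scene_distance(along(origin, light_dir, t))
        if h < 1e-4:
            return 0.0
        res = min(res, k * h / t)
        t += h
    return res
```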

Presentation: Rendering Worlds with Two Triangles

Fantasy Lab releases Radium SDK

Fantasy Lab, the game development studio started by Mike Bunnell (formerly of NVIDIA), has updated their website with information about their new real-time global illumination SDK called Radium. You’ll remember Fantasy Lab as the company that released the Rhinork GI demo and announced the game Danger Planet (covered here). The description boasts infinite bounces of light. Assuming that they are using the same disc-based transfer approach used in Bunnell’s GPU Gems 2 article (also check out the recent Pixar paper Point-Based Approximate Color Bleeding), I think this means that any number of bounces can be calculated, since each bounce is an iteration in that algorithm. I’d definitely like to hear more details but I suppose that is unlikely to happen as they are trying to make a living 🙂

Game Computing Applications Group @ SIGGRAPH

The group I work in at AMD is involved in a few presentations at SIGGRAPH this year. First, Chris Oat and Natalya Tatarchuk will be presenting a talk in the Advances in Real-Time Rendering in 3D Graphics and Games course on Monday on our latest demo “Froblins”. This talk will cover using the GPU and DirectX 10.1 for scene management, occlusion culling, terrain tessellation, approximations to global illumination, character tessellation, and crowd simulation.

I will be presenting a shorter talk on Thursday from 4:15-4:45 in the Beyond Programmable Shading: In Action course, focusing on how we used the GPU to do some general computing in the Froblins demo to allow our Froblin characters to navigate around the world and avoid each other. My addition to this course was a bit on short notice so I am fairly nervous, but how could I pass up an opportunity to speak at SIGGRAPH? I must say I am very jealous of my co-workers who will be done with all presenting duties on the first day of the conference!

I am always eager to meet new people so please feel free to introduce yourself if you will be in attendance!

Update: Here’s the link to the chapter from the course notes on our Froblins demo: March of the Froblins: Simulation and Rendering Massive Crowds of Intelligent and Detailed Creatures on GPU

Tessellation of Displaced Subdivision Surfaces in DX11

NVIDIA posted Ignacio Castaño’s DX11 tessellation talk from GameFest. Though DX11 doesn’t seem to be as much of a forward leap as DX10 was, tessellation and compute shaders are certainly significant additions to the API. Castaño discusses an implementation of Loop and Schaefer’s approximation to Catmull-Clark subdivision surfaces as described in “Approximating Catmull-Clark Subdivision Surfaces with Bicubic Patches“. He additionally describes using displacement mapping with vector displacements and discusses techniques for sampling the displacement map that ensure a watertight mesh.

Building Details in a Pixel Shader

Humus recently posted a new demo. He renders the interior of rooms visible from the exterior using cube maps. This eliminates the need for additional geometry to represent the interior. With D3D10.1, cube map arrays are used. This allows different cube maps to be selected dynamically in the shader to add variety to the rooms rendered.

It seems to me that you could additionally transform the vector used to fetch from the cubemaps to provide a random rotation around the vertical axis to provide further variation between rooms.
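A sketch of that suggestion in Python, assuming a hypothetical per-room integer hash picks both the cube-map array slice and the rotation. Because the cube map is meant to line up with the room’s walls, this sketch defaults to quarter turns; arbitrary angles are available but would likely leave the walls skewed relative to the facade (our assumption, not something shown in the demo).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def room_hash(room_x: int, room_y: int) -> int:
    """Hypothetical per-room integer hash (any decent hash works)."""
    return ((room_x * 73856093) ^ (room_y * 19349663)) & 0xFFFFFFFF

def rotate_about_y(v: Vec3, angle: float) -> Vec3:
    """Rotate v around the vertical (y) axis; the y component is unchanged."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] + s * v[2], v[1], -s * v[0] + c * v[2])

def room_cubemap_lookup(view_dir: Vec3, room_x: int, room_y: int, num_cubemaps: int,
                        quarter_turns_only: bool = True) -> Tuple[int, Vec3]:
    """Return (cube-map array slice, rotated lookup direction) for one room."""
    h = room_hash(room_x, room_y)
    if quarter_turns_only:
        angle = (h % 4) * (math.pi / 2)  # 0, 90, 180 or 270 degrees
    else:
        angle = (h / 0xFFFFFFFF) * 2.0 * math.pi
    cube_index = (h // 4) % num_cubemaps
    return cube_index, rotate_about_y(view_dir, angle)
```

In a shader the same idea amounts to a per-room rotation applied to the lookup vector before sampling the cube map array, so each of N cube maps can yield up to four distinct-looking rooms.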