Iñigo Quílez explains Slisesix demo

Iñigo Quílez recently posted a presentation on the techniques used in the “Slisesix” rgba demo. Procedurally generating a distance field representation of the scene allows a fast, space-skipping raycast to be performed. A distance field representation also enables other tricks, such as fast AO and soft shadow techniques, because information such as the distance and direction of the closest occluders is implicit in the representation. Alex Evans talked about using this type of technique (see Just Blur and Add Noise), but in his proposed implementation the distance field came from rasterizing geometry into slices and blurring outward.
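To make the space-skipping idea concrete, here is a minimal sketch in Python of marching a ray through a distance field, plus a cheap AO estimate that reads the same field. The scene (`scene_distance`, a unit sphere sitting on a ground plane), the function names, and all constants are my own assumptions for illustration; this is not code from the presentation or the demo.

```python
import math

def scene_distance(p):
    """Hypothetical scene: unit sphere at the origin above a ground plane at y = -1."""
    x, y, z = p
    sphere = math.sqrt(x * x + y * y + z * z) - 1.0
    plane = y + 1.0
    return min(sphere, plane)

def sphere_trace(origin, direction, max_steps=128, max_dist=100.0, eps=1e-4):
    """March along the ray, stepping by the distance to the nearest surface.

    Because nothing can be closer than that distance, the step never
    overshoots, and empty space is skipped in a few large steps.
    Returns the hit distance along the ray, or None on a miss.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        d = scene_distance(p)
        if d < eps:
            return t
        t += d
        if t > max_dist:
            return None
    return None

def ambient_occlusion(p, n, samples=5, step=0.1):
    """Estimate AO by walking along the normal and comparing the distance
    walked with the field value there; a smaller field value means a
    nearby occluder. Weights and sample counts are arbitrary choices."""
    occ = 0.0
    weight = 1.0
    for i in range(1, samples + 1):
        h = step * i
        q = tuple(pi + h * ni for pi, ni in zip(p, n))
        occ += weight * (h - scene_distance(q))
        weight *= 0.5
    return max(0.0, min(1.0, 1.0 - occ))
```

A ray fired from `(0, 0, -3)` along `+z` reaches the sphere in three steps, and a point on the ground near where the sphere touches it comes out darker than a point on top of the sphere.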

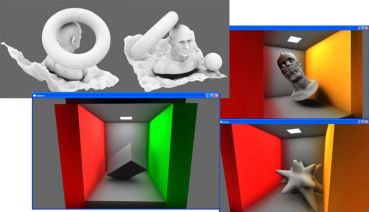

Presentation: Rendering Worlds with Two Triangles

Game Computing Applications Group @ SIGGRAPH

The group I work in at AMD is involved in a few presentations at SIGGRAPH this year. First, Chris Oat and Natalya Tatarchuk will be presenting a talk in the Advances in Real-Time Rendering in 3D Graphics and Games course on Monday on our latest demo “Froblins”. This talk will cover using the GPU and DirectX 10.1 for scene management, occlusion culling, terrain tessellation, approximations to global illumination, character tessellation, and crowd simulation.

I will be presenting a shorter talk on Thursday from 4:15-4:45 in the Beyond Programmable Shading: In Action course, focusing on how we used the GPU to do some general computing in the Froblins demo to allow our Froblin characters to navigate around the world and avoid each other. My addition to this course came on fairly short notice, so I am rather nervous, but how could I pass up an opportunity to speak at SIGGRAPH? I must say I am very jealous of my co-workers who will be done with all presenting duties on the first day of the conference!

I am always eager to meet new people so please feel free to introduce yourself if you will be in attendance!

Update: Here’s the link to the chapter from the course notes on our Froblins demo: March of the Froblins: Simulation and Rendering Massive Crowds of Intelligent and Detailed Creatures on GPU

Tessellation of Displaced Subdivision Surfaces in DX11

NVIDIA posted Ignacio Castaño’s DX11 tessellation talk from GameFest. Though DX11 doesn’t seem to be as much of a forward leap as DX10 was, tessellation and compute shaders are certainly significant additions to the API. Castaño discusses an implementation of Loop and Schaefer’s approximation to Catmull-Clark subdivision surfaces as described in “Approximating Catmull-Clark Subdivision Surfaces with Bicubic Patches”. He additionally describes using displacement mapping with vector displacements and discusses techniques for sampling the displacement map that ensure a watertight mesh.

Larrabee paper and articles

Amidst a flurry of articles from technical websites, Intel also released the paper (non-ACM link) on the Larrabee architecture that will be presented at SIGGRAPH next week.

Articles discussing some details that were released in a presentation by Larry Seiler:

http://www.pcper.com/article.php?aid=602

http://techgage.com/article/intel_opens_up_about_larrabee

http://www.hexus.net/content/item.php?item=14757

Graphics Hardware 2008 Slides

Graphics Hardware 2008 organizers have begun to post slides from the conference here. I don’t think the papers are online yet and the proceedings didn’t come with a DVD this year so I guess everyone will just have to wait 🙂

Horizon Split Ambient Occlusion

I have so much I want to write about what I saw at I3D and GDC that sitting down and doing it seems daunting. I will try and post my thoughts over the next few days.

One interesting poster at I3D (and also a short talk at GDC) was an extension to SSAO. The idea was to calculate a piece-wise linear approximation of the horizon à la horizon mapping. This is achieved by sampling the depth values along m steps (they used 8) in n equally spaced directions (8 again) in the tangent frame. At each depth sample, you update the horizon value if the current depth sample is higher than the current estimate of the horizon in that direction. Sampling in this fashion reduces over-occlusion. This is actually very similar to the approximation to AO that Dachsbacher and Tatarchuk described in their poster at I3D last year, “Prism Parallax Occlusion Mapping with Accurate Silhouette Generation”. All of the authors from the poster are NVIDIA guys and they announced that they will release a whitepaper describing their method.
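The horizon search described above can be sketched on the CPU in Python. This is a simplified version under my own assumptions, not the poster's formulation: it works on a plain heightfield (larger values are "higher"), treats the tangent plane as flat, and turns each horizon angle into visibility with `1 - sin(horizon)`. The function name `horizon_ao` and its parameters are hypothetical.

```python
import math

def horizon_ao(height, x, y, n_dirs=8, m_steps=8, step=1.0):
    """Approximate AO at texel (x, y) of a 2D heightfield (list of rows).

    For each of n_dirs equally spaced directions, take m_steps samples and
    keep the steepest slope seen so far; that slope is the horizon estimate
    in that direction. Returns visibility in [0, 1] (1 = unoccluded).
    """
    rows, cols = len(height), len(height[0])
    h0 = height[y][x]
    visibility = 0.0
    for k in range(n_dirs):
        phi = 2.0 * math.pi * k / n_dirs
        dx, dy = math.cos(phi), math.sin(phi)
        max_slope = 0.0  # the horizon starts at the (flat) tangent plane
        for s in range(1, m_steps + 1):
            dist = s * step
            sx = int(round(x + dx * dist))
            sy = int(round(y + dy * dist))
            if not (0 <= sx < cols and 0 <= sy < rows):
                break  # ran off the buffer; stop marching this direction
            slope = (height[sy][sx] - h0) / dist
            if slope > max_slope:
                max_slope = slope  # raise the horizon only when a sample is higher
        horizon = math.atan(max_slope)
        visibility += 1.0 - math.sin(horizon)
    return visibility / n_dirs
```

On a flat heightfield every horizon stays at zero and the function returns full visibility; at the bottom of a one-texel pit surrounded by tall walls it drops close to zero.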

Presentations from Gamefest 2007

Slides and audio from Microsoft’s Gamefest 2007 are now available. The presentations cover a gamut of topics, from art, audio, and graphics to researchy-type stuff from MSR. Check it out here.

Volumetric particle lighting

At SIGGRAPH this year, there was a talk by the AMD/ATI Demo Team about the Ruby: Whiteout demo. Attendance was disappointingly low, but the talk was filled to the brim with GPU tips and tricks, especially in the lighting department. This stuff hasn’t been presented anywhere else and I haven’t seen much discussion on the web, so I decided to highlight a few of the key topics.

One of the really impressive subjects covered was volumetric lighting (w.r.t. particles and hair). Modeling light interaction with participating media is a notoriously difficult problem (see subsurface scattering, volumetric shadows/light shafts) and many surface approximations have been found. However, dealing with a heterogeneous volume of varying density, as is the case with a cloud of particles or hair, is still daunting. The method they presented involves finding the distance between the first surface seen from the viewpoint of the light and the exit surface (the thickness), and also accumulating the particle density between those surfaces. Depending on how you decide to handle calculating this thickness and particle density, it could take two passes. They present a method for calculating this in one pass.

By outputting z in the red channel, 1-z in the green channel and particle density in alpha, setting the RGB blend mode to min and the alpha blend mode to additive, and rendering all particles from the viewpoint of the light, you get the thickness and density in one pass. Min blending leaves the nearest depth in red and one minus the farthest depth in green, so the thickness is (1 - green) - red. This same method can be applied to meshes such as hair. It should be noted that this information can also be used to cast shadows onto external objects.
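Here is a small CPU-side sketch in Python of what the blend unit ends up doing for a single light-space pixel. The function names, the clear values, and the clamp for empty pixels are my assumptions; the slides describe the GPU setup, not this code.

```python
def blend_particles(particles):
    """Simulate min blending on RGB and additive blending on alpha.

    `particles` is an iterable of (z, density) pairs with z in [0, 1],
    in any order (min and add are both order independent).
    Assumes the target was cleared to RGB = 1 and alpha = 0.
    """
    r, g, a = 1.0, 1.0, 0.0
    for z, density in particles:
        r = min(r, z)        # red keeps the nearest depth
        g = min(g, 1.0 - z)  # green keeps 1 - farthest depth
        a += density         # alpha accumulates density
    return r, g, a

def thickness_and_density(r, g, a):
    """Recover the thickness of the volume and the accumulated density."""
    nearest = r
    farthest = 1.0 - g
    # Clamp so a pixel no particle touched reports zero thickness.
    return max(0.0, farthest - nearest), a
```

For particles at depths 0.2, 0.5 and 0.7, this recovers a thickness of 0.5 regardless of the order in which they were drawn, which is why no sorting or second pass is needed.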

The presenters also discuss a few other tricks. These include rendering depth offsets on the particles and blurring the composited depth before performing the thickness calculation discussed above to remove discontinuities. Also, for handling shadows from non-particle objects, they suggest using standard shadow mapping per-vertex on the particles. I think I originally saw this idea mentioned by Lutz Latta in one of his particle system articles or presentations.

I might dredge up some other topics from the presentation later on, but everyone should check out the slides here.

Just Blur and Add Noise

All of the hoopla regarding the game LittleBigPlanet has reminded me of the excellent talk that Alex Evans, co-founder of game creator Media Molecule, gave last year at the Advanced Real-time Rendering for 3D Games course at SIGGRAPH 2006. There are good presentation slides in PDF form at the ATI Developer site: here. If you look at the slide pictures you can see early renders of LittleBigPlanet characters, with unfortunate spheres placed on their heads so as not to spoil the game (this was about nine months before the game was announced).

Anyway, he discusses a few techniques that he tried in achieving global illumination-like effects in a “small world” type of environment, all of which were implemented on a Radeon mobility 9800; nothing is too high tech.

Carmack’s Virtualized Textures

John Carmack from id recently brought his virtualized texture technology back into the spotlight at QuakeCon 2007 (videos 1 2 3). Personally I think the concept of rolling your own texture virtualization and swapping in and out 80+GB of textures is immensely interesting, especially when you factor in ideas like only fetching lower mip levels when you’re moving quickly and applying motion blur. There is a thread on gamedev.net right now that has lots of speculation and interesting ideas regarding how exactly to go about doing such a thing.

Also, the same thread linked to an interesting paper (“Unified Texture Management for Arbitrary Meshes”) from 2004 exploring some similar ideas.